by Adam Schadle Issue 86 - February 2014

1. Broadcasters need to ingest and process video from many sources, so they face the multifaceted task of handling all types of formats, including 4K. What is your multi-resolution strategy?

With the emergence of new compression standards such as High Efficiency Video Coding (HEVC), which offers a potential bit-rate reduction of up to 50 percent over H.264, 4K services are on the horizon. Broadcasters and service providers are now researching the technology required to deliver 4K content across a wide range of services: real-time TV channels delivered over cable, IPTV, or satellite; file-based delivery of VOD content; and streaming over the Internet. The ability to test and analyze video quality remains as critical as ever. However, the task will be complicated by more efficient encoding standards that fit additional channels into the same or smaller data rates.
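The following sketch makes the "additional channels" point concrete by working through the arithmetic for a fixed-capacity delivery pipe. The 40 Mbit/s pipe, the 16 Mbit/s H.264 rate for one 4K channel, and the helper names are illustrative assumptions. The 50 percent figure is the best-case saving quoted above, not a guaranteed result.

```python
# Hypothetical capacity planning: how many 4K channels fit in a fixed delivery pipe.
# All bit rates here are illustrative assumptions, not measured values.


def channels_that_fit(pipe_mbps: float, channel_mbps: float) -> int:
    """Number of whole channels of a given bit rate that fit in the pipe."""
    if channel_mbps <= 0:
        raise ValueError("channel bit rate must be positive")
    return int(pipe_mbps // channel_mbps)


def hevc_bitrate(h264_mbps: float, reduction: float = 0.5) -> float:
    """Bit rate after applying an assumed HEVC saving relative to H.264.

    reduction=0.5 is the 'up to 50 percent' best case; real savings vary with content.
    """
    return h264_mbps * (1.0 - reduction)


if __name__ == "__main__":
    pipe = 40.0                               # assumed multiplex capacity, Mbit/s
    h264_4k = 16.0                            # assumed H.264 rate for one 4K channel
    hevc_4k = hevc_bitrate(h264_4k)           # 8.0 Mbit/s at the best-case saving
    print(channels_that_fit(pipe, h264_4k))   # 2
    print(channels_that_fit(pipe, hevc_4k))   # 5
```

With these assumed numbers, the same pipe carries five 4K channels instead of two. Each channel therefore has far less bit-rate headroom, which helps explain why quality testing becomes more demanding.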

At Video Clarity, we've always been proactive about delivering video quality testing and analysis tools that support the current and emerging formats our clients require, and 4K is no exception. Our core capabilities rest on the principle that testing and QC must take place before content goes live as well as after. Our tools can ingest video from any source and then play it back in every resolution needed for delivery to every screen.

2. When dealing with 4K files, streams, and uncompressed video, what capabilities need to be present in a testing solution?

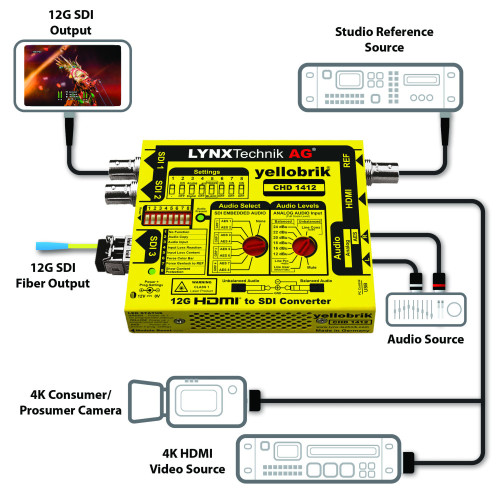

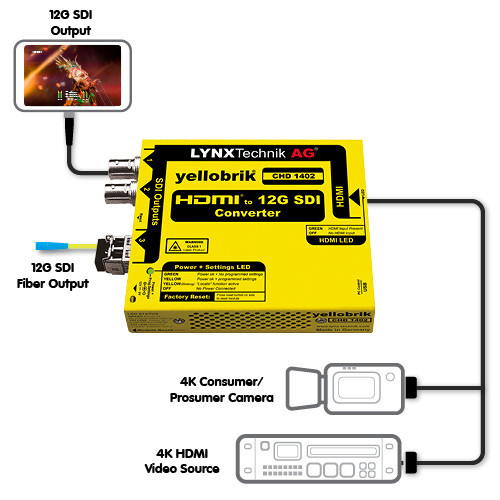

The most important capability is to ingest, record in 4K resolution, and test content from any compressed or uncompressed video source using today's SDI-type interfaces. This includes signals from IP network, file, or uncompressed video infrastructure feeds. It also includes files from the editing suite, such as uncompressed image sequences of any length or lightly compressed mezzanine-level files. A secondary requirement is the ability to decode any file encoded in the current MPEG or JPEG standards, as well as in emerging standards such as HEVC. Finally, the system must be able to play back the content in every resolution required for delivery to every screen, from UHDTV and HDTV down to mobile device resolutions.

Video Clarity provides a testing and monitoring foundation that can handle a large array of original file formats, with the ability to record and play back content at up to 60 Hz. It's what we do, and we do it well.

3. What types of streams are being carried over current delivery networks that a broadcaster or network needs to include in its testing?

In today's typical delivery network, a media operation might receive an original uncompressed stream from a satellite or an existing network, or a file from a post-production process. The operation then needs to output that content as adaptive streams in a number of profiles at different bit rates. These streams support many platforms, including high bit rates for HDTV. Some of these streams might carry SD content, but they're now primarily high-definition pictures. These must be decoded on the fly, recorded, ingested for QC testing, and encoded with the compression codecs required by the target devices. During the testing phase, the system must provide an automated way to test a number of key attributes against industry-adopted standards: video quality, audio quality, lip sync, loudness, and ancillary content.

4. What are the primary resolutions that they have to deal with?

As we said, there is still a certain amount of SD content in the broadcast chain. However, most tools with practical application in today's media operations focus on the prevailing HD formats, while also supporting SD. We design our testing solutions around four key resolution levels. At the bottom are the streaming formats for handheld devices and PCs. Just above those are today's most active broadcast HD formats, 720p and 1080i. A step up from that is 1080p at 50 or 60 frames per second. This format is often required for a live event transmitted over a dedicated network, for in-production dailies, or for an HD camera feed that must be transmitted for production purposes. Above that is the 4K level.
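Each step up this ladder is harder to test, partly because of the raw data rate of the uncompressed signal. The following sketch computes the active-video rate for each level. It assumes 10-bit 4:2:2 sampling, which averages 20 bits per pixel, and it ignores blanking, audio, and ancillary data, so real SDI link rates are higher. The 640x360 streaming resolution, the frame rate chosen for each level, and the use of 3840x2160 (UHD) for 4K are illustrative assumptions.

```python
from typing import Dict, Tuple

# 10-bit 4:2:2: every two pixels carry 2 luma + 1 Cb + 1 Cr samples = 40 bits,
# i.e. 20 bits per pixel on average.
BITS_PER_PIXEL = 20

# (width, height, frames per second); the streaming entry is a hypothetical example.
TIERS: Dict[str, Tuple[int, int, int]] = {
    "streaming 360p30": (640, 360, 30),
    "broadcast HD 720p60": (1280, 720, 60),
    "broadcast HD 1080i (30 frames/s)": (1920, 1080, 30),
    "1080p60": (1920, 1080, 60),
    "4K UHD 2160p60": (3840, 2160, 60),
}


def active_rate_gbps(width: int, height: int, fps: int,
                     bpp: int = BITS_PER_PIXEL) -> float:
    """Active-picture bit rate in Gbit/s (no blanking, audio, or ancillary data)."""
    return width * height * fps * bpp / 1e9


if __name__ == "__main__":
    for name, (w, h, fps) in TIERS.items():
        print(f"{name:34s} {active_rate_gbps(w, h, fps):6.3f} Gbit/s")
```

Under these assumptions, 1080p60 needs about 2.49 Gbit/s of active video, which fits within a single 3G-SDI link (2.97 Gbit/s nominal). 2160p60 needs about 9.95 Gbit/s. For that reason, 4K at 60 Hz is typically carried over four 3G-SDI links or a single 12G-SDI link.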

All of these resolutions are supported in every tool we make. We also maintain a wide variety of input and output capabilities, so customers can test individual devices and then place them into a test network architecture. That architecture might be at a live broadcasting plant, in a content delivery network, or in the lab of the product's manufacturer or developer.

5. Considering that audio requires more types of testing than video, what type of audio measurement is required?

In general, we've found that our customers require three key audio measurements. The first is perceptual quality. This measurement simulates a human listening test and produces a result as close as possible to an actual subjective study carried out in a standardized environment. An important benchmark here is Perceptual Evaluation of Audio Quality (PEAQ), an ITU-R standard (BS.1387) that enables the most accurate audio quality measurement in the industry today. Our products support this testing by establishing a PEAQ record that documents the top-end perceptual quality level.

Next, our real-time systems offer audio performance measurements that identify performance issues with audio devices or with audio in the network chain. These tests let operators find audio faults such as silences and glitches. The testing system logs each failure and simultaneously records the offending video in a real-time test session. Audio performance testing also covers lip-sync measurement with millisecond accuracy. It uses the same logging and time-stamped recording function to capture the out-of-specification portion of the A/V signal.
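Silence and glitch detection can be approximated with simple signal checks. The following sketch flags a silence wherever the short-term RMS level stays below a threshold for a minimum time. It flags a glitch wherever one sample jumps abruptly from the previous one. The function names, the -60 dBFS threshold, the 0.5 s minimum duration, and the jump limit are hypothetical defaults, not values from any Video Clarity product. A large jump is only a hint of a fault, because loud high-frequency content can also produce one.

```python
import math
from typing import List, Sequence, Tuple


def find_silences(samples: Sequence[float], rate: int,
                  threshold_dbfs: float = -60.0,
                  min_duration_s: float = 0.5,
                  window_s: float = 0.01) -> List[Tuple[float, float]]:
    """Return (start_s, end_s) spans where short-term RMS stays below
    threshold_dbfs for at least min_duration_s. Full scale = 1.0."""
    win = max(1, int(rate * window_s))
    threshold = 10 ** (threshold_dbfs / 20)  # dBFS -> linear amplitude
    spans: List[Tuple[float, float]] = []
    start = None                             # start time of the current quiet run
    n_windows = len(samples) // win
    for i in range(n_windows):
        chunk = samples[i * win:(i + 1) * win]
        rms = math.sqrt(sum(x * x for x in chunk) / win)
        t = i * win / rate
        if rms < threshold:
            if start is None:
                start = t
        else:
            if start is not None and t - start >= min_duration_s:
                spans.append((start, t))
            start = None
    end = n_windows * win / rate
    if start is not None and end - start >= min_duration_s:  # quiet run reaches the end
        spans.append((start, end))
    return spans


def find_glitches(samples: Sequence[float], rate: int,
                  max_step: float = 0.5) -> List[float]:
    """Return times (s) where adjacent samples differ by more than max_step."""
    return [i / rate for i in range(1, len(samples))
            if abs(samples[i] - samples[i - 1]) > max_step]
```

In a real-time system, each returned time would be written to the failure log and used to locate the matching portion of the recorded A/V stream.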

The third area is audio loudness testing, which has become extremely important with the adoption of loudness standards around the world. Effective loudness testing measures every individual audio channel according to the applicable loudness standard and reports a single measurement for the program as a whole. We also offer a true-peak audio measurement as part of loudness testing.

6. What are the differences between real-time and non-real-time testing?

There are fundamental differences between the two. Real-time testing measures the performance of an audio and video signal over time as it passes through a device or over the air. With our solutions, an operator can monitor a signal according to certain test parameters and then determine its performance over time. We take a reference signal, compare it with the downstream version, and provide a very accurate measurement of video or audio quality. No invasive techniques are needed, such as inserting tones or markers in the audio. We have also developed an exclusive technique for non-invasive lip-sync measurement, and we provide real-time loudness testing. When a measurement falls outside a threshold predefined by the operator, our system records the stream in question for later review. It also creates a log entry for the failed measurement and links that entry to the recording.
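A simple full-reference metric can illustrate the reference-versus-downstream comparison and threshold-triggered logging. PSNR is used here only because it is short and well known. It is a stand-in, not the metric Video Clarity uses, and it has nothing to do with the exclusive lip-sync technique mentioned above. The sketch assumes that frames are already decoded to 8-bit luma, flattened into lists, and aligned in time. The 35 dB threshold and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def psnr(ref: Sequence[int], test: Sequence[int], max_value: int = 255) -> float:
    """PSNR in dB between two same-size frames of 8-bit samples (flattened)."""
    if not ref or len(ref) != len(test):
        raise ValueError("frames must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(ref, test)) / len(ref)
    if mse == 0:
        return float("inf")  # identical frames
    return 10 * math.log10(max_value ** 2 / mse)


def monitor(ref_frames: Sequence[Sequence[int]],
            test_frames: Sequence[Sequence[int]],
            threshold_db: float = 35.0) -> List[Tuple[int, float]]:
    """Score each aligned frame pair and log (frame_index, psnr_db) for every
    frame that falls below threshold_db. A real system would also trigger
    recording of the offending stretch at this point."""
    log: List[Tuple[int, float]] = []
    for index, (ref, test) in enumerate(zip(ref_frames, test_frames)):
        score = psnr(ref, test)
        if score < threshold_db:
            log.append((index, score))
    return log
```

In a live system, each logged frame index would be mapped to a timestamp so the corresponding stretch of the recording can be retrieved for review.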

Non-real-time quality testing is an iterative process that is useful for fine-tuning a device's codec design, its settings, or a network path to achieve a desired level of quality. Typically, it involves recording sets of audio and video content with error-prone characteristics, such as high motion. That content is run repeatedly through a testing process, and quality is measured against a chosen variable, such as encoding bit rate. In this way, the operator can adjust the variable to find the optimal setting of a given device for delivery to a given end-user device.
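The non-real-time loop described above amounts to a parameter sweep. In the following sketch, `measure` stands for whatever step encodes the test clip at a given bit rate and scores it against the reference. `toy_measure` is an invented model used only so that the example runs, and the 40-point target is arbitrary.

```python
from typing import Callable, Iterable, List, Optional, Tuple


def sweep(bitrates_kbps: Iterable[int],
          measure: Callable[[int], float],
          target_score: float) -> Tuple[Optional[int], List[Tuple[int, float]]]:
    """Measure quality at each bit rate (lowest first) and return the lowest
    bit rate that meets target_score (None if none does) plus all results."""
    results: List[Tuple[int, float]] = []
    best: Optional[int] = None
    for bitrate in sorted(bitrates_kbps):
        score = measure(bitrate)
        results.append((bitrate, score))
        if best is None and score >= target_score:
            best = bitrate
    return best, results


def toy_measure(bitrate_kbps: int) -> float:
    """Hypothetical quality model for the demo only: quality rises with bit
    rate and saturates. Replace with a real encode-and-score step."""
    return 45.0 - 6000.0 / bitrate_kbps


if __name__ == "__main__":
    best, results = sweep([2000, 500, 1500, 1000], toy_measure, target_score=40.0)
    print(best)     # 1500
    print(results)  # [(500, 33.0), (1000, 39.0), (1500, 41.0), (2000, 42.0)]
```

The sweep keeps every result instead of stopping at the first passing bit rate. This lets the operator inspect the full quality curve before choosing a setting.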

4K testing in today's world

Author: Adam Schadle

Published 1st March 2014