Digital media monitoring

Author: Bob Pank

Published 1st July 2011

What is digital media monitoring?

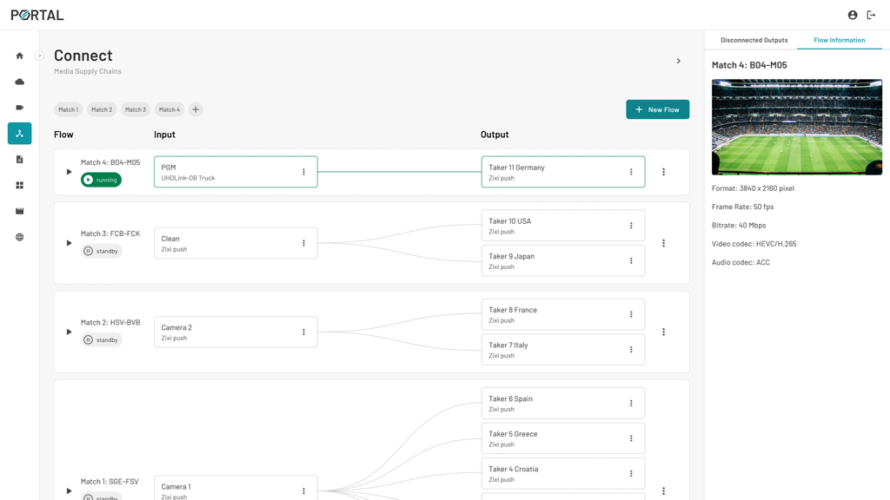

Most media delivery chains now include a combination of IP and broadcast stages, regardless of the viewing platform of the end user. This combination of technologies into a hybrid delivery chain has thrown up new complications in terms of monitoring and analysis. Before the introduction of IP technologies into media delivery applications, operators could rely on conventional broadcast monitoring tools alone. As IP became more common, IP-specific data monitoring tools were added alongside the broadcast tools, at appropriate parts of the delivery chain. The broadcast monitoring tools gave engineers a view of the signal quality during the broadcast sections, and the IP tools gave a separate view of the data as it passed through the IP sections. By contrast, a digital media monitoring system is purpose-designed to give engineers one coherent view of the data along the entire chain, ‘seeing’ the data at all points, whether in the broadcast or IP domains, and offering a clear end-to-end correlation and analysis across the domains.

Who needs a digital media monitoring system?

Anyone operating a hybrid delivery chain or delivering media through IP networks, in any application, whether that’s contribution of live action from a sports stadium, a full-service quad-play operation, or an IPTV service to hotel or residential developments.

Why aren’t conventional broadcast and IP monitoring tools adequate for these applications?

Some media operators have tried to build monitoring capability using a patchwork of conventional broadcast monitoring tools and IP tools. But this approach has serious disadvantages: the complexity of a hybrid digital media delivery chain means that the interaction between broadcast and IP domains can introduce errors into the signal, which may only become apparent further down the delivery chain. Conventional monitoring tools can detect these errors, but cannot usually see back up the chain to where the root cause occurred. Similarly, irregularities in the signal at any one stage may be within acceptable parameters at that stage – and therefore go undetected as ‘errors’ by the monitoring device deployed there – while still causing problems downstream. The result is that the true cause of errors takes longer to analyze and correct, leading to increased cost and reduced quality of service.
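The point about irregularities that pass every local check can be illustrated with a little arithmetic. The sketch below is only an illustration: the stage names, the jitter figures, the per-stage limit and the end-to-end budget are all invented, and it assumes that worst-case jitter contributions simply add up along the chain.

```python
def check_chain(stage_jitter_ms, per_stage_limit_ms, end_to_end_budget_ms):
    """Compare per-stage checks with a whole-chain check.

    stage_jitter_ms is a list of (stage name, jitter added by that stage).
    Returns the stages that would raise a local alarm, and whether the
    summed worst-case jitter exceeds the end-to-end budget.
    """
    local_alarms = [name for name, jitter in stage_jitter_ms
                    if jitter > per_stage_limit_ms]
    worst_case_total = sum(jitter for _, jitter in stage_jitter_ms)
    return local_alarms, worst_case_total > end_to_end_budget_ms


# Hypothetical figures: every stage is under its own 5 ms limit,
# but together they exceed a 10 ms end-to-end budget.
stages = [("encoder", 3), ("core network", 4), ("edge QAM", 4)]
local_alarms, budget_exceeded = check_chain(stages, 5, 10)
print(local_alarms, budget_exceeded)
```

With these made-up numbers no individual monitoring device has anything to report, yet the chain as a whole is out of tolerance, which is the situation a patchwork of stand-alone tools tends to miss.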

What sort of errors would be difficult to resolve with this approach?

Because of the much greater complexity involved in providing, for example, a cable triple-play or quad-play service, there are many potential sources of faults. A simple example is timing errors: jitter does not necessarily result in a visible defect in itself, but it can lead to packet loss downstream. Each component in the transmission chain that receives video in IP packets needs to inspect each packet or its contents and perform some function on it. For a set-top box (STB) this means decoding and displaying. For a network switch or router this means determining the ‘next hop’ for the IP packet. For an edge QAM encoder this means terminating the IP layer and constructing a transport stream for transmission over the HFC network. In all cases this requires some level of buffering, and this creates both a source of, and a problem with, network-induced jitter. Buffer underflows or overflows, which occur when IP packet spacing is not closely matched to the playout timing encoded in the transport stream, result either in immediate packet loss or in increased jitter further downstream, which in turn is more likely to result in packet loss. Packet loss is the symptom, but the cause is the jitter from upstream, which the engineer will find difficult to track down without a network-wide correlated view of the data.
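To make the buffering argument concrete, here is a minimal sketch of a fixed-rate playout buffer fed by packets with different arrival patterns. It is a simplified model rather than a description of any real device: the function name, the prefill rule and the integer time units are assumptions made for the illustration.

```python
def simulate_buffer(arrival_times, playout_interval, capacity, prefill):
    """Count underflows and overflows for a fixed-rate playout buffer.

    arrival_times must be sorted. Playout starts one interval after the
    buffer first holds `prefill` packets, then removes one packet per
    interval. A packet arriving at a full buffer is dropped (overflow);
    a playout tick that finds the buffer empty is an underflow.
    """
    level = 0
    underflows = 0
    overflows = 0
    next_playout = None  # playout has not started yet

    for t in arrival_times:
        # Serve every playout tick that is due by this arrival time.
        while next_playout is not None and next_playout <= t:
            if level > 0:
                level -= 1
            else:
                underflows += 1
            next_playout += playout_interval

        if level >= capacity:
            overflows += 1
        else:
            level += 1

        if next_playout is None and level >= prefill:
            next_playout = t + playout_interval

    return underflows, overflows


# Twelve packets at the same average rate, first evenly spaced,
# then delivered in two bursts of six.
smooth = simulate_buffer(list(range(12)), 1, 4, 2)
bursty = simulate_buffer([0] * 6 + [6] * 6, 1, 4, 2)
print(smooth, bursty)
```

In this toy model the evenly spaced stream passes through cleanly, while the bursty stream both overflows the buffer (packets are dropped) and starves it (playout ticks find nothing to send), even though the average packet rate is identical.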

So how does a purpose-designed digital media monitoring system do the job better?

A system that’s purpose-designed for hybrid IP/broadcast delivery chains gives a completely transparent view of the data in both broadcast and IP domains, with a completely correlated analysis of data integrity as it passes down the chain. This means that if an error is reported halfway along the chain, but the root cause lies further upstream, the system presents intelligently correlated analysis for the whole data path and engineers can quickly get to the cause. A purpose-designed system should also provide a more nuanced interpretation of the data, so that it helps identify conditions which are likely to cause errors at stages further down the chain, even when errors are not being reported at the source.
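As a rough illustration of what a correlated view makes possible, the sketch below walks a list of probe readings ordered from upstream to downstream and compares where packet loss is first reported with where jitter first rises above a warning level. The `ProbeReading` type, the probe locations, the readings and the threshold are all hypothetical and do not represent any particular product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProbeReading:
    location: str
    jitter_ms: float
    packets_lost: int


def first_loss(readings: List[ProbeReading]) -> Optional[ProbeReading]:
    """Most upstream probe that reports packet loss (the visible symptom)."""
    for reading in readings:  # ordered upstream to downstream
        if reading.packets_lost > 0:
            return reading
    return None


def first_suspect(readings: List[ProbeReading],
                  jitter_warn_ms: float) -> Optional[ProbeReading]:
    """Most upstream probe with loss or with jitter above the warning level."""
    for reading in readings:
        if reading.packets_lost > 0 or reading.jitter_ms > jitter_warn_ms:
            return reading
    return None


# Invented readings for one service along a hybrid chain.
chain = [
    ProbeReading("satellite ingest", 0.5, 0),
    ProbeReading("core router", 6.0, 0),
    ProbeReading("edge QAM", 9.0, 12),
    ProbeReading("set-top box", 9.5, 12),
]

symptom = first_loss(chain)
suspect = first_suspect(chain, jitter_warn_ms=5.0)
print(symptom.location, "<-", suspect.location)
```

Here the loss first shows up at the edge QAM, but the correlated readings point to the core router as the place to start looking, because that is where the jitter first exceeds the assumed warning level.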

What is ‘end-to-end monitoring’, and why is it important?

End-to-end monitoring means the ability to see and analyze the data at all points from one end of the delivery chain – typically the ingest from the satellite – right through to the delivery point – typically the set-top box and the viewer’s screen. Only a specifically designed digital media monitoring system can deliver this, with a range of media probes for every key point in the delivery chain, including the set-top box. A true end-to-end system provides monitoring and analysis of the quality of the data coming off the satellite feed (something many operators neglect to monitor), right through to deep packet analytics of the quality of service experienced at an individual subscriber’s STB. It can not only analyze the transport stream, but can also monitor all the conditions that can disrupt broadcast services, such as loss of audio or picture, and it can provide analysis of conditional access system performance, the electronic program guide, and over-the-top services.