Working With Audio Signals

Author: Kieron Seth

Published 1st June 2011

For most of the past century, working with audio signals was a straightforward process. Audio information was converted to an electrical signal in the form of a varying voltage, either between the two conductors of a pair (balanced) or between a conductor and the ground reference (unbalanced). The bandwidth, frequency response, distortion and noise requirements of audio circuits were all well defined. Interconnecting audio equipment was a matter of matching impedances and levels, usually with simple passive circuits.

The conversion to digital signal formats changed all of that. Beginning in 1985 with the publication of the first standards for what became known as AES/EBU digital audio, system designers were faced with an ever-growing number of complications. Digital audio consists of a stream of data words, each representing the voltage of the input signal at one instant: the analog voltage is measured (“sampled”) at a fixed rate, and each measurement is converted to a numerical value. At the decoder, these samples are converted back to analog voltages in sequence, and the analog signal (or a reasonable facsimile thereof) is reconstructed. Because it is always preferable to avoid unnecessary decode and re-encode steps along the way, system designers needed ways of working with these digital signals in their native form. This is where the complications started to arise.
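The sampling and decoding steps described above can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not an AES/EBU implementation: the “analog” input is a Python function of time normalized to ±1.0, each sample is a linear PCM integer (24 bits here, the longest audio word AES/EBU carries), and the function names `sample_and_quantize` and `reconstruct` are hypothetical. AES/EBU framing, channel status and the reconstruction filter a real converter applies are not modeled.

```python
import math

def sample_and_quantize(signal, sample_rate, n_samples, bits=24):
    """Sample a continuous signal at a fixed rate and quantize each sample
    to a signed integer code of the given bit depth (simple linear PCM model)."""
    full_scale = 2 ** (bits - 1) - 1
    codes = []
    for n in range(n_samples):
        v = signal(n / sample_rate)          # instantaneous "voltage", normalized to [-1.0, 1.0]
        v = max(-1.0, min(1.0, v))           # clip anything outside full scale
        codes.append(round(v * full_scale))  # nearest integer code
    return codes

def reconstruct(codes, bits=24):
    """Convert integer codes back to normalized sample values, in sequence.
    A real converter would also low-pass filter between these points."""
    full_scale = 2 ** (bits - 1) - 1
    return [c / full_scale for c in codes]

if __name__ == "__main__":
    rate = 48_000
    tone = lambda t: 0.5 * math.sin(2 * math.pi * 1_000 * t)  # 1 kHz test tone at half scale
    codes = sample_and_quantize(tone, rate, 48)               # 1 ms of audio
    values = reconstruct(codes)
    worst = max(abs(v - tone(n / rate)) for n, v in enumerate(values))
    print(len(codes), codes[:4], worst)
```

The worst-case difference between the reconstructed values and the original tone stays within one quantization step, which is the sense in which the decoded signal is “a reasonable facsimile” of the input.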

The first complication came with the need to switch between two signals or to mix two signals together. In the analog world, this was simple: a basic relay could do the switching, and a very simple circuit could do the mixing by adding the two signals together in a variable ratio. In the digital world, it became necessary to ensure first that the two signals were encoded at the same sample rate (several rates are in use, such as 32, 44.1 and 48 kHz), and then that they were synchronous with each other. When two signals are not locked to the same reference, there is no guarantee that a switch between them will fall on a sample boundary. The result is an invalid sample at the switch point, which produces a pop or click at the output device when the signal is decoded.
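The two preconditions above (same sample rate, common reference) can be made concrete with a small model. This is a sketch under assumptions: a `Stream` is a hypothetical container holding a sample rate and a list of integer PCM codes, and “locked to the same reference” is modeled simply as sample index n meaning the same instant in both lists. With that guarantee a cut always lands on a sample boundary; a rate mismatch is rejected rather than silently mixed, since real systems would need sample-rate conversion first. The names `Stream`, `switch` and `mix` are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class Stream:
    sample_rate: int  # samples per second
    samples: list     # integer PCM codes, one per sample period

def require_same_rate(a, b):
    """Refuse to combine streams encoded at different sample rates."""
    if a.sample_rate != b.sample_rate:
        raise ValueError("sample rates differ: convert one stream before switching or mixing")

def switch(a, b, at):
    """Cut from a to b at sample index `at`. Assumes both streams are locked to
    the same reference, so index n refers to the same instant in each."""
    require_same_rate(a, b)
    return Stream(a.sample_rate, a.samples[:at] + b.samples[at:])

def mix(a, b, gain_a, gain_b, bits=24):
    """Add two aligned streams in a variable ratio and clip the sum to the
    legal range for the word length."""
    require_same_rate(a, b)
    lo, hi = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    out = [max(lo, min(hi, round(gain_a * x + gain_b * y)))
           for x, y in zip(a.samples, b.samples)]
    return Stream(a.sample_rate, out)

if __name__ == "__main__":
    a = Stream(48_000, [100, 200, 300, 400])
    b = Stream(48_000, [-100, -200, -300, -400])
    print(switch(a, b, 2).samples)           # prints [100, 200, -300, -400]
    print(mix(a, b, 0.75, 0.25).samples)     # prints [50, 100, 150, 200]
```

Note that the hard cut in the first example jumps from 200 to -300 in a single sample, which leads directly to the next problem.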

Even with timing problems taken care of, noise at the switch point remained a common issue, because a digital audio signal is constructed of discrete samples. When a switch takes place, there is often an abrupt difference between the values of the samples immediately before and immediately after the switch. When the signal is converted back to analog, neither the analog circuitry nor the loudspeaker presenting the audio can follow this discontinuity, and the result is noise at the switch point. This noise can be prevented by adjusting the levels of the samples before and after the switch point so that the two signals are at similar levels when the switch takes place. This is often called a “soft” switch and is quite commonly used, but it is a long way from the relay described earlier.
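One way to implement a soft switch is a linear crossfade: over a short window, the outgoing signal is ramped down while the incoming one is ramped up, so no single sample-to-sample step is large. The sketch below assumes sample-aligned integer lists and a linear ramp; the article does not say which curve is used, and real equipment may use other shapes or fade through silence. The names `soft_switch` and `largest_step` are hypothetical, and the largest step is used here only as a rough proxy for how audible a click would be.

```python
def soft_switch(a, b, start, fade_len):
    """Switch from sample list a to sample list b using a linear crossfade.
    Output is pure a before `start`, pure b from `start + fade_len` on, and a
    weighted blend of the two in between. Both lists must be sample-aligned."""
    if len(a) != len(b):
        raise ValueError("streams must be the same length and sample-aligned")
    out = []
    for n, (x, y) in enumerate(zip(a, b)):
        if n < start:
            g = 0.0                               # all of a
        elif n >= start + fade_len:
            g = 1.0                               # all of b
        else:
            g = (n - start + 1) / (fade_len + 1)  # ramp from a toward b
        out.append(round((1.0 - g) * x + g * y))
    return out

def largest_step(samples):
    """Largest sample-to-sample jump: a rough proxy for how audible a click is."""
    return max(abs(q - p) for p, q in zip(samples, samples[1:]))

if __name__ == "__main__":
    a, b = [1000] * 10, [-1000] * 10
    hard = a[:3] + b[3:]                          # instantaneous cut at sample 3
    soft = soft_switch(a, b, start=3, fade_len=4)
    print(largest_step(hard), largest_step(soft))  # prints 2000 400
```

Spreading the change over four intermediate samples cuts the largest single step by a factor of five in this example; a longer fade smooths it further at the cost of a slower transition.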

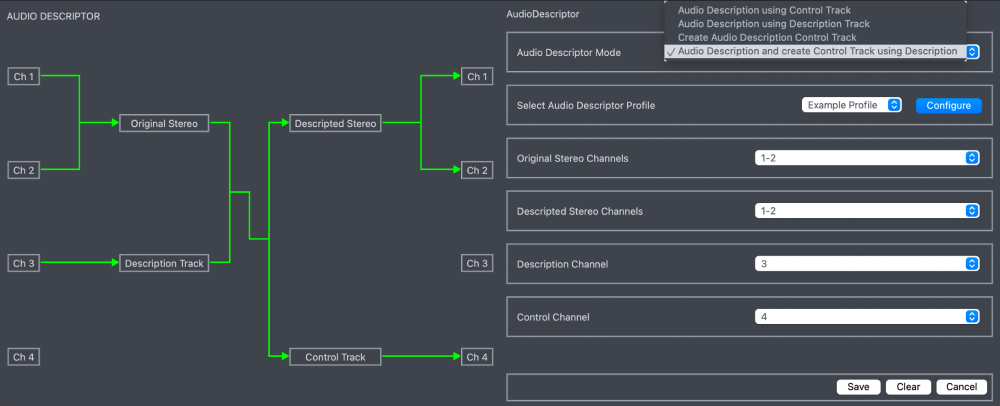

After a few years, system designers finally had a full toolkit for implementing practical, reliable and cost-effective digital audio systems. Then the complications started all over again. The challenge this time was called “embedded audio.” When the Serial Digital Interface (SDI) video format was standardized, provision was made for carrying “ancillary data” in the intervals where no active video is present. When this data carries digital audio, it is referred to as “embedded audio.” The advantages of combining audio and video signals into one transport layer are clear: system complexity and cost can be reduced considerably by eliminating the need for separate audio cabling, patch panels, and distribution and monitoring equipment.

The price of this improvement is increased complexity in the video system, or reduced flexibility in handling the audio once it is multiplexed into the video stream. Consider the common problem of recorded material with audio tracks assigned to the wrong channels. In a separate audio system, it is easy to repatch the audio lines into the correct order and proceed.

Performing the same operation on material with embedded audio requires that the serial data be decoded, the audio data extracted and processed, and the result re-encoded as SDI for use in the system. The difference in cost is considerable and must be taken into account when designing a system that depends on embedded audio transport rather than a traditional, separate audio system.
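Once the audio has been extracted, the “processing” step for the wrong-channel example is simply a channel remap, the digital equivalent of repatching. This is a minimal sketch assuming the de-embedded audio is held as a list of per-sample tuples with one value per channel; `remap_channels` is a hypothetical name. Decoding the SDI stream, extracting the audio from the ancillary data, and re-embedding it afterwards (the costly parts described above) are not shown.

```python
def remap_channels(frames, mapping):
    """Reassign channels in de-embedded audio.

    frames:  list of tuples, one tuple per sample period, one value per channel
    mapping: mapping[i] is the input channel index to place on output channel i
    """
    if not frames:
        return []
    n_in = len(frames[0])
    for src in mapping:
        if not 0 <= src < n_in:
            raise ValueError(f"input channel {src} does not exist (have {n_in})")
    return [tuple(frame[src] for src in mapping) for frame in frames]

if __name__ == "__main__":
    # Hypothetical 4-channel material with left and right swapped within each pair.
    frames = [(1, 2, 3, 4), (5, 6, 7, 8)]
    print(remap_channels(frames, [1, 0, 3, 2]))  # prints [(2, 1, 4, 3), (6, 5, 8, 7)]
```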