Thanks for the memory

Author: Bob Pank

Published 1st July 2011

If I need to impress on someone just how old I really am, I explain that my first computer had 32kB of RAM. Then we go through the “You mean 32 meg?” “No, I mean 32k” routine. It does seem implausible that, while I managed to write letters on my BBC Model B, today I need half a million times the memory to bash out columns for TV Bay.

This is, of course, largely Gordon Moore being right again. If the density of components doubles every 18 months, we could assume that memory will do the same. Given that my current computer is two years old, I was maybe five years ahead of the curve when I specified 16GB, no more.
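The arithmetic behind the “half a million times” comparison and the Moore's law aside is easy to check. This is a minimal Python sketch, assuming binary units (1kB = 1,024 bytes, 1GB = 1,024³ bytes) and a clean 18-month doubling period; the constant and function names are purely illustrative.

```python
import math

# Figures from the column: a 32kB BBC Model B versus a 16GB desktop.
# Binary units (1kB = 1024 bytes, 1GB = 1024**3 bytes) are an assumption.
BBC_B_BYTES = 32 * 1024
DESKTOP_BYTES = 16 * 1024**3

# Doubling period quoted in the text for Moore's law.
DOUBLING_MONTHS = 18


def memory_ratio(old_bytes: int, new_bytes: int) -> float:
    """How many times more memory the new machine has."""
    return new_bytes / old_bytes


def doublings(ratio: float) -> float:
    """Number of doublings needed to grow by `ratio`."""
    return math.log2(ratio)


def years_for(doubling_count: float, months_per_doubling: float) -> float:
    """Elapsed years if every doubling takes the same number of months."""
    return doubling_count * months_per_doubling / 12


ratio = memory_ratio(BBC_B_BYTES, DESKTOP_BYTES)
n = doublings(ratio)
print(f"ratio: {ratio:,.0f}")
print(f"doublings: {n:.0f}")
print(f"years at {DOUBLING_MONTHS} months per doubling: {years_for(n, DOUBLING_MONTHS):.1f}")
```

The ratio works out at exactly 2¹⁹, or 524,288, so “half a million” holds up. That is 19 doublings, which at 18 months apiece comes to 28.5 years.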

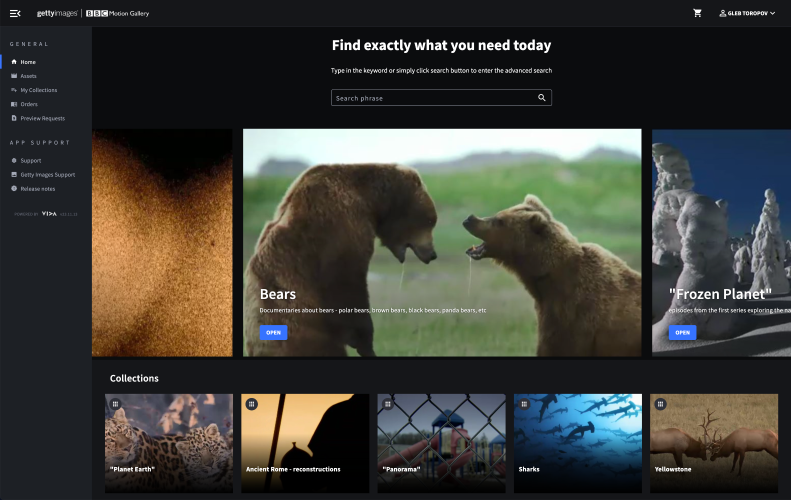

One of the reasons I started musing on memory was the recent news reports that the BBC has resurrected the Domesday Project of 1986, and put the content online. Domesday was an amazing piece of forward thinking, both in terms of content and technology.

No-one had coined the term “crowdsourcing” 25 years ago, but that was at the heart of Domesday. Anyone who wanted to contribute, in whatever form, was invited to, with the intention that the whole would be an accurate picture of what the British people thought of their country at the time.

The crowdsourcing concept was not easy for some to grasp. Being tangentially involved with the project, I was at the press launch, where (to my shame) I got irked by a Daily Mirror reporter who dismissed the whole thing out of hand because there was nothing about Margaret Thatcher on it. She simply could not understand that if there was nothing about Thatcher, it was because no-one cared about her.

All this multimedia content was held on a souped-up BBC B with two laser discs. I seem to remember that one was a constant linear velocity disc and the other constant angular velocity, and I even understood why. That knowledge is long gone.

The hardware was the project’s downfall, sadly. The content was great, and the ability to link different elements to research what you wanted was fascinating, but the kit was just too expensive. One principle was that every library in the country should have one, but back in those days librarians clung to the quaint belief that their budgets should go on books.

Today, of course, nobody thinks about the cost of storage. My grossly over-specified desktop computer has four one-terabyte disk drives because it has four disk slots and terabyte drives were small change at the time. Even using one pair to back the other up, I am not going to run out of space any time soon.

I am currently working on a project with IABM, the body that represents manufacturers in our industry, and the very nice people at research company Screen Digest. I hope to be able to share some of this with you soon. In the meantime, though, I have been looking at some of Screen Digest’s fascinating data.

According to them, it was back in 2006 that it became cheaper to store an hour of broadcast quality video as a digital file on disk rather than on tape. Since then the cost of tape has remained more or less constant, which is what you would expect of a mature product with fixed manufacturing costs.

But the cost of data storage on disk has continued to plummet. In 1995 it would have cost you $820 to store a gigabyte of data on disk. In 2011 it is just six cents. So we store more and more content. In 2010 the broadcast and media industry alone installed 3.6 exabytes (an exabyte is a billion gigabytes), and by 2015 we could be consuming more than 21EB a year.
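To put those Screen Digest figures on a yearly footing, the compound annual rate of change is (end / start)^(1 / years) − 1. The sketch below is a minimal illustration, assuming a steady exponential trend between the quoted endpoints; the real year-by-year path will have been lumpier, and the names are hypothetical.

```python
import math

# Figures quoted in the column (Screen Digest data as reported).
COST_PER_GB_1995 = 820.00   # US dollars per gigabyte on disk
COST_PER_GB_2011 = 0.06     # US dollars per gigabyte on disk
EB_INSTALLED_2010 = 3.6     # exabytes installed by broadcast and media
EB_FORECAST_2015 = 21.0     # exabytes a year, forecast


def annual_rate(start: float, end: float, years: float) -> float:
    """Compound annual rate of change; negative means a decline."""
    return (end / start) ** (1 / years) - 1


def halving_time(start: float, end: float, years: float) -> float:
    """Years taken for the value to halve, assuming a steady exponential fall."""
    halvings = math.log2(start / end)
    return years / halvings


fall = annual_rate(COST_PER_GB_1995, COST_PER_GB_2011, 2011 - 1995)
halve = halving_time(COST_PER_GB_1995, COST_PER_GB_2011, 2011 - 1995)
growth = annual_rate(EB_INSTALLED_2010, EB_FORECAST_2015, 2015 - 2010)

print(f"disk cost per GB: {fall:.0%} a year")
print(f"cost halves every {halve:.2f} years")
print(f"storage installed: {growth:+.0%} a year")
```

On these figures the cost of a gigabyte on disk fell by roughly 45% a year, halving about every 14 months, while the 2015 forecast implies the industry's storage appetite growing by a little over 40% a year.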

Some of that will be storage to hand. One of my favourite products at NAB was the SGI ArcFiniti, which is a MAID: a massive array of idle disks. It gives you archive quantities of storage – 1.4 petabytes in a single rack – with good lifecycle and energy costs, because when the disks are not in use they are spun down to idle speeds.

Some of it will be in server farms, providing the raw horsepower for non-broadcast delivery. YouTube plays out close to 150 billion minutes of video a month, which is the equivalent of roughly three and a half million linear television channels running around the clock. Apple has not told us yet why it is building a server farm – we are guessing it is video distribution – but it has ordered 12 petabytes of EMC Isilon disks for it.
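The channel comparison is a straight division of monthly viewing by the minutes a single 24-hour channel fills in a month. A minimal sketch, taking the “close to 150 billion” figure at face value and assuming either a 30-day or a 31-day month; the function names are illustrative only.

```python
# Monthly viewing volume quoted in the column ("close to 150 billion").
YOUTUBE_MINUTES_PER_MONTH = 150e9


def channel_minutes_per_month(days: int = 30) -> int:
    """Minutes of playout one round-the-clock linear channel fills in a month."""
    return days * 24 * 60


def equivalent_channels(minutes_per_month: float, days: int = 30) -> float:
    """How many 24-hour channels the same viewing volume would fill."""
    return minutes_per_month / channel_minutes_per_month(days)


for days in (30, 31):
    channels = equivalent_channels(YOUTUBE_MINUTES_PER_MONTH, days)
    print(f"{days}-day month: {channels / 1e6:.2f} million channels")
```

On those assumptions the result comes out at between 3.3 and 3.5 million channels, depending on the length of the month.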

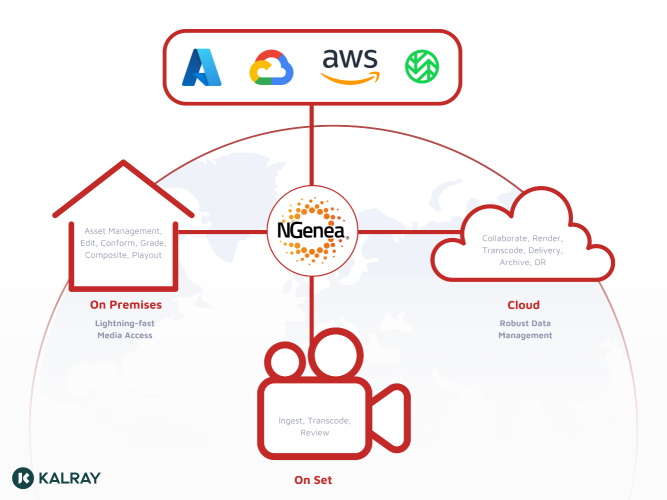

And some of it will be for cloud applications. The broadcast cloud has always been hampered by two issues: our particularly paranoid concern for security, and the difficulty of getting content to and from the cloud fast enough, given the large files we are dealing with.

Several manufacturers – not least Avid and Quantel – were talking about always being in contact with your content, if you use their cloud solutions. Other people, like XDT, had offerings which speed up the transfer of content around IP circuits.

A recent paper in the journal Nature Photonics claimed to have achieved a data rate of 26 terabits a second down a single fibre, using a single multi-coloured laser and fast Fourier transforms performed optically before the data was decoded. Putting that into the core internet architecture would lift a few bottlenecks. It probably still will not get fibre to my desk in Tunbridge Wells, though.

For now, though, computer memory – on chips and on disk – is helping us work faster, smarter and cheaper. For that we should give thanks.