During the COVID lockdowns, I read about so many interesting and inventive ways people stayed engaged and connected worldwide.

Many forms of traditional entertainment were either cancelled or on hiatus, and live event venues in particular remained dark through most of the pandemic. The void was immense: people longed for the social interaction of live entertainment and simply craved a sense of community.

One heartwarming story I saw came out of Spain, where a woman who had worked in the live events industry, and had experienced the business effects of COVID first-hand, turned her foodie-focused Facebook page into a thriving online community where people could gather, engage, and feel connected. She has since continued her passion for online hosting as a community experience lead at an online content and experiential agency.

Not all efforts were as personal and grassroots as this example. Gradually, major music artists began performing live online sessions for fans. Niche online events like Esports found a wider audience and accelerated into a legitimate entertainment force during the pandemic. Immersive experiences such as viewing parties for sports or television shows, VR events, or social media platforms filled the gap and drew large crowds, even if some people didn’t understand the languages of the event hosts or other participants.

These “event re-inventions” provided the sense of community people needed. They also created a significant opportunity for translated content, especially in live events.

It’s no secret that online engagement had already gained traction before COVID; the lockdowns simply accelerated its growth. We began to see a crop of content and event-focused companies like VeraContent, Sceenic, and Live Current Media, each with a different purpose but all sharing one goal: to bring people together.

Subtitling and translation platforms like those from XL8 are perfect complements to these companies. They can add another dimension to the new viewing and engagement experiences by eliminating language barriers and building stronger community engagement.

And there’s a lot of ground to cover to accomplish that. An article on The Hive notes that according to The Ethnologue, there are approximately 7,151 living languages worldwide.

The same article notes that in most countries, “multilingualism is the rule, not the exception.” Hence, it’s critical to support multiple languages in any type of online community engagement, live or not, to encourage maximum participation.

Finally, a recommended best practice is to translate all relevant online community engagement materials to serve the majority of your audience.

Navigating a New Landscape

Even now, with much of the world putting the pandemic behind it, there is still heavy demand for live events, especially online. That makes the live event market especially interesting for global localization.

A growing embrace of cloud-based production and distribution has created more opportunities to introduce AI into these workflows. Low-latency Content Delivery Networks (CDNs) and live linear video distribution over IP networks have changed content delivery and opened the door to more automation, scalability, and accessibility.

AI-powered machine translation provides a cost-effective way for streaming platforms and linear broadcast networks to increase their retention and engagement by providing localized content.

The Unique Challenges of Live

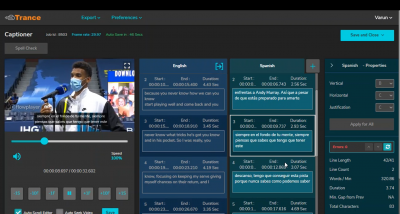

Live events present unique translation challenges, even for human interpreters. They can’t always translate accurately because everything is happening in real time, and there’s no time for a post-edit process. At XL8, we are always looking for new ways to close the gap between human translators and AI-powered tools, but several challenges remain.

For example, machine-synthesized voices often lack the accuracy of human translation and can “suppress” or fail to reflect human emotions. There are also issues with speaker diarization, or speaker recognition: clearly identifying who is talking when multiple people speak during an event. Recently, XL8 introduced speaker diarization, which allows its platform users to enable speaker tags that identify speakers throughout the content.

Audiences are often more interested in the live event itself and the associated social interaction, and are more forgiving of minor translation inaccuracies. However, a poor translation can hurt engagement, so it’s important to use a partner with the most accurate AI-powered machine translation engines. XL8 recommends implementing a 30- to 40-second delay, wherever possible, so advanced technologies such as context awareness can be used to produce a more accurate translation.
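To make the delay idea concrete, here is a minimal Python sketch of a caption buffer that holds each subtitle back for a fixed window, so a translation engine can still revise it as more context arrives. It is an illustration only, not XL8’s implementation: the `Caption` and `DelayedCaptionBuffer` names, the `revise_latest` method, and the 30-second default are all assumptions made for this example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Caption:
    spoken_at: float  # seconds since the stream started
    text: str


class DelayedCaptionBuffer:
    """Hypothetical buffer: holds captions for `delay_seconds` before release."""

    def __init__(self, delay_seconds: float = 30.0):
        self.delay_seconds = delay_seconds
        self._pending = deque()

    def push(self, caption: Caption) -> None:
        # New captions wait in the buffer instead of going straight to viewers.
        self._pending.append(caption)

    def revise_latest(self, text: str) -> None:
        # Later context may improve the newest caption while it is still held.
        if self._pending:
            self._pending[-1].text = text

    def release(self, now: float) -> list:
        # Emit every caption whose delay window has fully elapsed.
        ready = []
        while self._pending and now - self._pending[0].spoken_at >= self.delay_seconds:
            ready.append(self._pending.popleft())
        return ready
```

In this sketch, a caption spoken at second 5 would reach viewers at second 35 at the earliest, and any revision made in between replaces the first draft before anyone sees it. A longer delay gives the engine more context to work with, at the cost of subtitles that trail further behind the live action.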

Changing Audience Habits

Subtitles and captions are often only associated with use by the hearing impaired. While that is still a primary application, they are also increasingly popular with online audiences who may often be using multiple screens and want to track what’s happening on each. Many online users scroll through their feeds with the sound off, and on-screen text helps these users catch dialogue they could have missed otherwise.

Studies of social media habits regularly report that subtitles and captions increase video viewing time and that people are more likely to watch a video in its entirety if subtitles or captions are provided.

Also interesting is that 62% of Americans use subtitles more on streaming services than on traditional TV channels – most likely because streaming platforms make it easy to manage subtitle preferences. More than half of Americans regularly watch content with subtitles, with that percentage rising to 70% for Gen Z viewers, a demographic that also tends to watch content in public areas on connected devices, where the sound usually needs to be turned down or off.

Plus, there is a growing acknowledgement among viewers, and even content creators, that film and TV dialogue is simply getting harder to hear, largely attributed to current audio mixing techniques. But whatever the reason, it signals another trend contributing to the increased use of subtitles and captions.

The Future Is Live

As an AI technology company specializing in media localization, XL8 continually develops new features to help linguists and translators use speech recognition, machine translation, and synthesized dubbing more efficiently.

Our previous blogs introduced MediaCAT for streamlining localization workflows for pre-recorded and offline media. In contrast, XL8’s Live Subs product was designed specifically for online use cases, such as live events.

There are so many potential applications for enhancing live experiences with subtitling. We touched on several use cases in this blog and encourage you to explore how localization can play an integral role in how people stay entertained and connected while enjoying their favourite live content.